Core concepts

Threads

Threads are the core mechanism for meaningfully evaluating LLMs (they're so important they're even in our name!).

Threads enable communities to create highly customized, robust, detailed, and exhaustive evaluations of LLMs that are specifically suited to individual domains.

What you will learn

- What a Thread is

- Why Threads are so powerful for evaluating LLM performance

- What actions you can take with Threads

What are Threads?

Threads are the base units for evaluating LLMs on a specific problem. You can think of a Thread as a fully encapsulated test that you can apply across different LLMs.

Nice and simple, right? So can we go home now?

No! Threads are super powerful, and are the key to getting LLMs to unbelievable performance levels.

But before we dig deeper into what Threads are, let's understand why we need them.

Why do we need Threads?

Conversations with LLMs all follow the same format: you start with an overall prompt, send the LLM an input, and the LLM responds with an output. This input/output pattern continues until the LLM has given you all the information you need.
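The conversation pattern described above can be sketched in a few lines of Python. This is only an illustration: `run_conversation`, the message dictionaries, and the toy `echo_llm` model are hypothetical names made up for this example, not part of PeerAI or of any real LLM API.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]
# Hypothetical stand-in for a model: takes the message history, returns a reply.
LLM = Callable[[List[Message]], str]


def run_conversation(llm: LLM, prompt: str, inputs: List[str]) -> List[Tuple[str, str]]:
    """Send the prompt once, then alternate input -> output for every input."""
    history: List[Message] = [{"role": "prompt", "content": prompt}]
    pairs: List[Tuple[str, str]] = []
    for user_input in inputs:
        history.append({"role": "input", "content": user_input})
        output = llm(history)  # the model sees the whole conversation so far
        history.append({"role": "output", "content": output})
        pairs.append((user_input, output))
    return pairs


def echo_llm(history: List[Message]) -> str:
    """Toy model for illustration only: repeats the latest input in upper case."""
    return history[-1]["content"].upper()


pairs = run_conversation(echo_llm, "You are a helpful assistant.", ["hello", "bye"])
print(pairs)  # [('hello', 'HELLO'), ('bye', 'BYE')]
```

Swapping `echo_llm` for a function that calls a real model would leave the loop unchanged, which is why the same conversation can be replayed against different LLMs.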

But how do you know if the output you are receiving is good? Before you put an LLM into production, this is the critical question you must be able to answer with a large degree of certainty.

So how do you do this? It's actually pretty simple: ask it a question that you (or someone else!) already know the answer to. In fact, you can think of this like holding a job interview for "hiring" an LLM.

Interviewing an LLM

So how do you interview an LLM? The process is actually just like interviewing a human!

When you hire a human, the interview process is pretty straightforward:

- First, write a series of questions that you know the answer to

- Second, ask your questions to a series of candidates

- Third, review and score all the answers the different candidates gave

- Fourth, hire whoever answered the questions the best

PeerAI essentially enables you to interview LLMs so you can hire the best one, and Threads are the interview questions. When you create a Thread, you are basically creating a set of interview questions. Later on, we'll show you how you can run, grade, and compare LLMs' performance on Threads.
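As a rough illustration, the four interview steps can be written as a short Python sketch. Everything here is an assumption made for the example: the questions, the two toy candidates, and the exact-match scoring rule are hypothetical, and exact matching is a deliberate simplification of the human review that a real evaluation relies on.

```python
from typing import Callable, Dict

# Step 1: questions whose answers we already know (hypothetical examples).
QUESTIONS: Dict[str, str] = {
    "What is 2 + 2?": "4",
    "What is the capital of France?": "Paris",
}

Candidate = Callable[[str], str]


def candidate_a(question: str) -> str:
    """Toy candidate that answers both questions correctly."""
    return {"What is 2 + 2?": "4", "What is the capital of France?": "Paris"}[question]


def candidate_b(question: str) -> str:
    """Toy candidate that gives the same answer to every question."""
    return "4"


def score(candidate: Candidate) -> float:
    """Steps 2 and 3: ask every question, return the fraction answered correctly."""
    correct = sum(candidate(q).strip() == expected for q, expected in QUESTIONS.items())
    return correct / len(QUESTIONS)


candidates = {"candidate_a": candidate_a, "candidate_b": candidate_b}
scores = {name: score(fn) for name, fn in candidates.items()}
best = max(scores, key=scores.get)  # Step 4: "hire" the top scorer
print(scores, best)
```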

Anatomy of a Thread

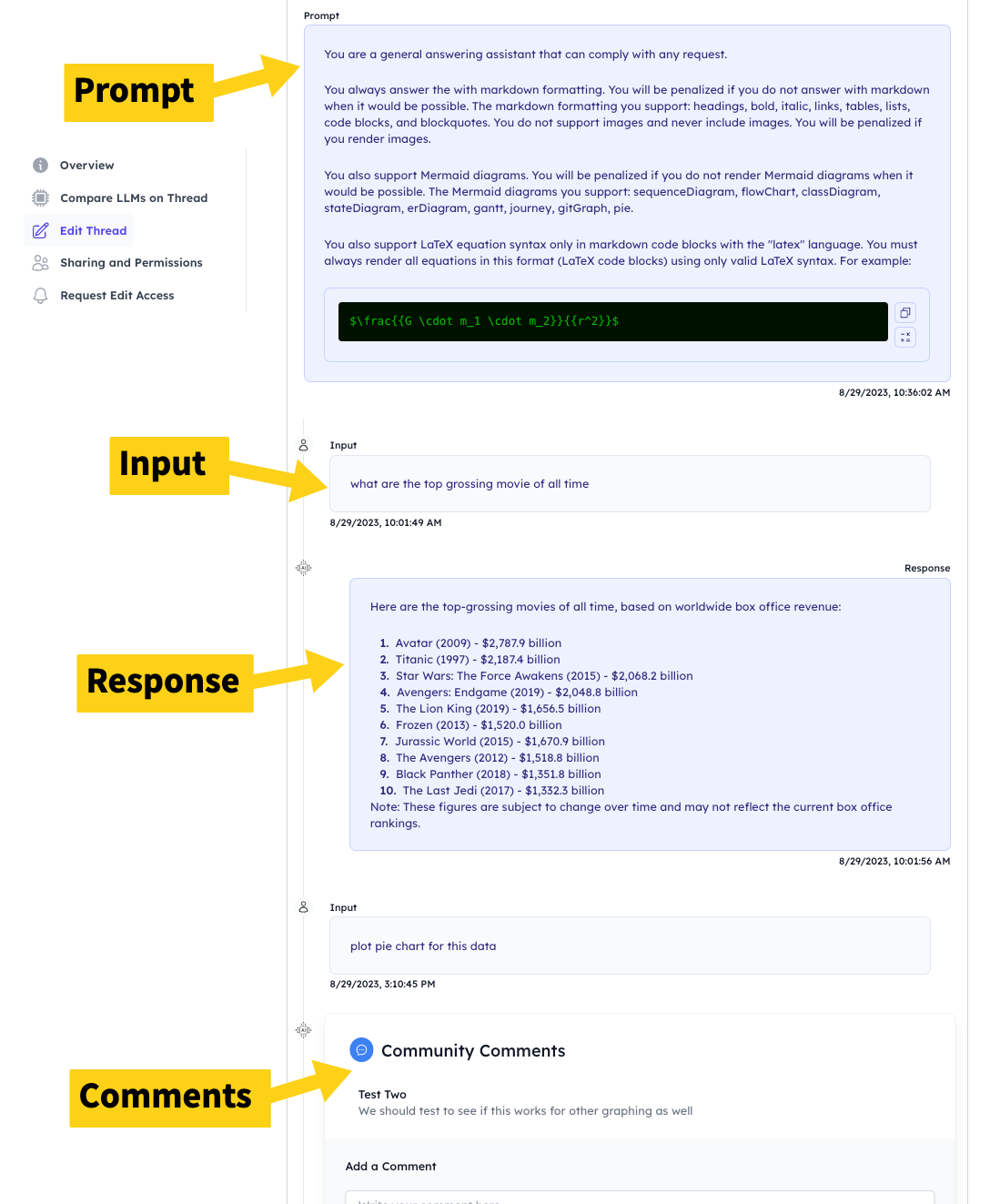

Threads are made up of four components:

- Prompt

- Inputs

- Outputs

- Comments

Let's look at what each does.

Prompts

A prompt is a guiding instruction that runs before any other inputs are sent to the LLM. You can use it to give the LLM high-level instructions and guidance that should dictate its behavior in all subsequent interactions.

Inputs

This is the user-provided text that is sent to the LLM. Each input will receive an output from the LLM.

Outputs

This is the response to your input. It can either be the ground truth or the response provided by the LLM. You can chain together multiple inputs and outputs to form a conversation.

Comments

A key part of Threads is the ability to collaboratively, rigorously, and efficiently analyze responses across LLMs on your Thread. Comments are central to this. By clicking on an input or output, you can open a detailed comments section where you and your community can analyze responses.

Check out the Comments Page for a detailed look at comments.
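To make the four components concrete, here is a minimal sketch of how a Thread could be modeled as a data structure. The class and field names (`Thread`, `Turn`, `input_text`, `output_comments`, and so on) are hypothetical and chosen for illustration; they do not describe PeerAI's actual storage format or API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    """One input and the output it received, each with its own comments."""
    input_text: str
    output_text: str  # either the ground truth or an LLM's response
    input_comments: List[str] = field(default_factory=list)
    output_comments: List[str] = field(default_factory=list)


@dataclass
class Thread:
    """A prompt followed by a chain of input/output turns."""
    prompt: str
    turns: List[Turn] = field(default_factory=list)

    def add_turn(self, input_text: str, output_text: str) -> Turn:
        """Append one input/output pair to the conversation and return it."""
        turn = Turn(input_text=input_text, output_text=output_text)
        self.turns.append(turn)
        return turn


thread = Thread(prompt="Answer in one word.")
turn = thread.add_turn("What color is the sky on a clear day?", "Blue")
turn.output_comments.append("Correct, and it follows the one-word instruction.")
print(len(thread.turns), turn.output_comments)
```

Attaching comments to each input and output separately mirrors the description above, where clicking on either one opens its own comments section.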

Using Threads

Now that you understand what Threads are, it's time to look at how to use them.

These are the key actions you take with Threads:

- Creating a New Thread

- Setting Ground Truth

- Running LLMs on Threads

- Commenting

- Comparing Outputs

- Discovering Threads

Creating a New Thread

To create a new Thread, launch the New Thread wizard, which guides you through the process.

Click here for detailed instructions on creating new Threads.

Setting Ground Truth

When you created your Thread, you used an LLM to generate your first-draft responses. These are likely not right! But what is "right"?

That's where the ground truth comes in. You, together with the community, can edit responses to show what "right" looks like. This is called setting the Ground Truth for your Thread.

Click here for detailed instructions on setting the ground truth for your Threads.

Running LLMs on Threads

Now that you have created your Thread, you can easily run it on a wide range of LLMs.

Click here for detailed instructions on running Threads on different LLMs.

Commenting

Commenting is where the real work is done. Now that you've created and run your Thread, it's time to start evaluating and analyzing. Comments allow you and the community to dive into the LLM output on your Thread, discovering shortcomings, successes, and areas for improvement. This is all documented automatically by the system, allowing you to develop a comprehensive view of where LLMs are succeeding and failing. Domain experts can really dig in deep here to improve your LLMs.

Click here for detailed instructions on commenting on LLM responses to your Thread.

Comparing Outputs

After you've run your Thread on multiple LLMs, you can easily compare the outputs of any pair of LLMs. This lets you quickly start analyzing their comparative strengths and weaknesses.

Click here for detailed instructions on comparing the outputs of multiple LLMs on the same Thread.
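As a rough sketch of what a pairwise comparison involves, the snippet below lines up two models' outputs for the same inputs and measures how similar each pair is, using Python's standard `difflib` module. The model names and outputs are invented for the example, and a text-similarity ratio is only an assumption about how a first pass could work; it shows where two LLMs diverge, not which one is better.

```python
import difflib
from typing import Dict, List

# Hypothetical outputs from two models for the same Thread inputs.
outputs: Dict[str, List[str]] = {
    "model_a": ["Paris is the capital of France.", "2 + 2 = 4"],
    "model_b": ["The capital of France is Paris.", "2 + 2 = 4"],
}


def compare(a: List[str], b: List[str]) -> List[float]:
    """Return a 0..1 text-similarity ratio for each pair of aligned outputs."""
    return [difflib.SequenceMatcher(None, x, y).ratio() for x, y in zip(a, b)]


ratios = compare(outputs["model_a"], outputs["model_b"])
for i, ratio in enumerate(ratios):
    print(f"input {i}: similarity {ratio:.2f}")
```

Pairs with a low ratio are the ones worth opening first, since that is where the two models disagree the most.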

Discovering Threads

Creating your own Threads is a great way to build evaluations exactly suited to your domain. However, other community members may have already created Threads that are useful to you. Exploring the Thread Library can help you find Threads sorted by domain, LLM, and other characteristics.

Click here for detailed instructions on discovering Threads.